Algorithmic Bias in Facial Recognition

As a biometric company, Keyo doesn’t support current facial recognition technology and practices in any capacity. We’ve written previous posts on its privacy violations and highlighted its dangers on social media as its failings have surfaced in the press.

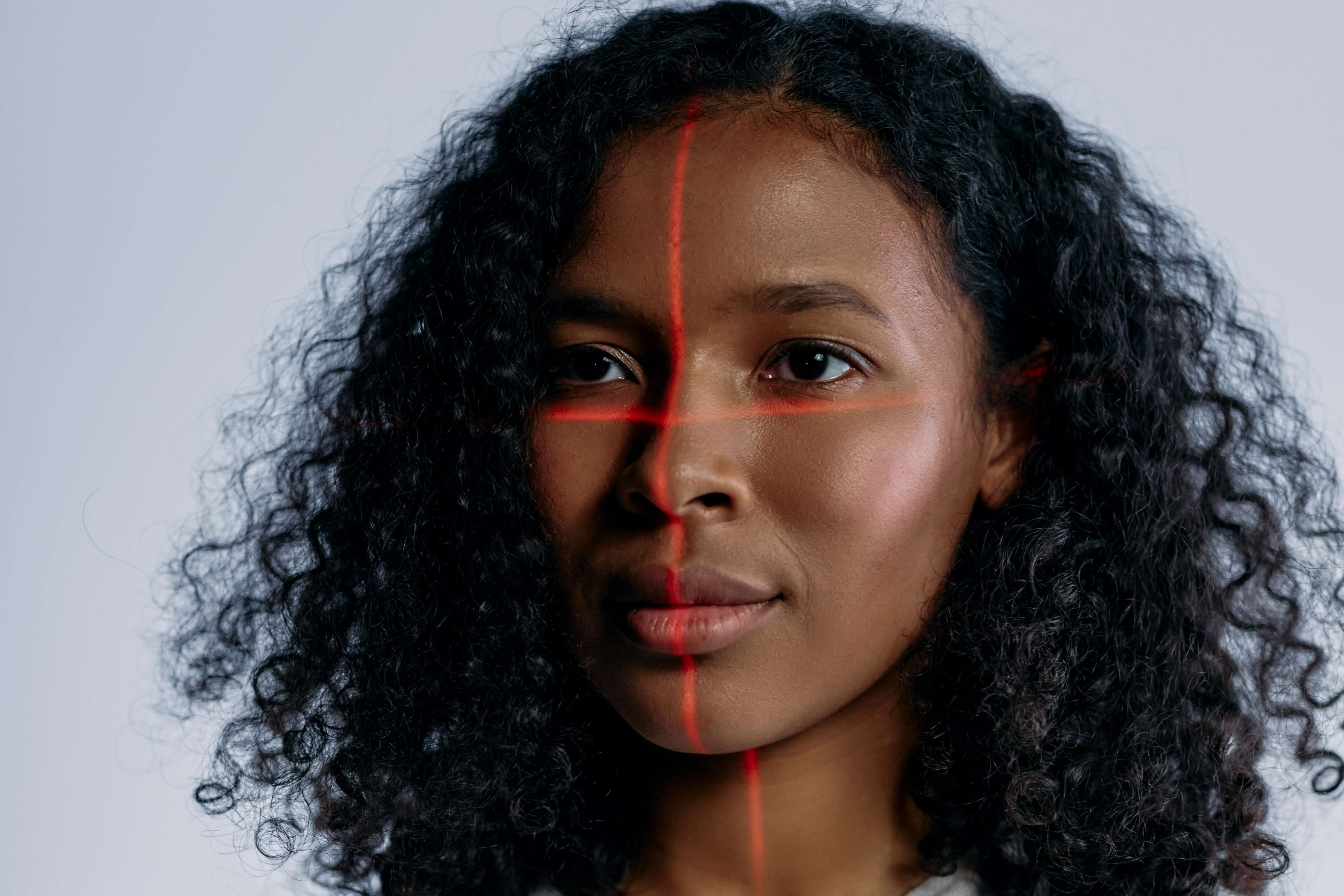

There are more than a few reasons to object to facial recognition until the technology improves and federal regulations are in place. One of the most harmful effects facial recognition can have on a society is its algorithmic bias against certain demographic groups, which widens existing inequities and perpetuates the discrimination that earlier activists fought to eradicate.

What is facial recognition?

Facial recognition is a biometric technology that maps data points on a person’s facial features to verify or identify who they are. It uses A.I. algorithms to learn how to recognize a person, then either verifies them against a single image in a database (one-to-one matching) or identifies them by searching against many images (one-to-many matching).
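The difference between verifying someone against one image and identifying them among many can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular vendor works: it assumes each face has already been turned into a numeric feature vector, and the three-number vectors, the names `person_a`/`person_b`/`person_c`, and the 0.9 similarity threshold are all hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Similarity between two equal-length feature vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def verify(probe, enrolled, threshold=0.9):
    """One-to-one: does the probe match this single enrolled template?"""
    return cosine_similarity(probe, enrolled) >= threshold

def identify(probe, gallery, threshold=0.9, top_k=3):
    """One-to-many: rank every gallery identity whose similarity clears the threshold."""
    scored = [(name, cosine_similarity(probe, vec)) for name, vec in gallery.items()]
    candidates = [(name, score) for name, score in scored if score >= threshold]
    candidates.sort(key=lambda pair: pair[1], reverse=True)
    return candidates[:top_k]

# Hypothetical three-number "faceprints"; real systems use far longer vectors.
gallery = {
    "person_a": [0.9, 0.1, 0.3],
    "person_b": [0.2, 0.8, 0.5],
    "person_c": [0.85, 0.2, 0.35],
}
probe = [0.88, 0.15, 0.32]

print(verify(probe, gallery["person_a"]))
print(identify(probe, gallery))
```

Note that `person_c`, a different hypothetical person with a similar vector, also clears the threshold and lands on the candidate list alongside `person_a`. A one-to-many search returns a ranked list like this, and someone still has to decide which candidate, if any, is the right person.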

How does an A.I. machine learning algorithm work?

Cathy O’Neil, data scientist, mathematician, activist and author of the NYT bestselling book Weapons of Math Destruction, describes the components of an algorithm this way:

- A specific, chosen set of data
- A chosen definition of success

An A.I. machine learning algorithm takes a set of data, learns which situations, patterns or occurrences lead to the chosen outcome of success (or failure), and then applies this knowledge to other sets of data.
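O’Neil’s two ingredients can be made concrete with a toy learner in Python. This is a minimal sketch, not how production systems are trained: the historical records, the single-number score, and the `learn_threshold` and `predict` helpers are all hypothetical.

```python
def learn_threshold(training_data, is_success):
    """Pick the cutoff on a single score that best reproduces past outcomes.

    training_data: list of (score, outcome) pairs, i.e. the "chosen set of data".
    is_success: function mapping an outcome to True/False, i.e. the
    "chosen definition of success".
    """
    candidates = sorted({score for score, _ in training_data})
    best_cutoff, best_correct = None, -1
    for cutoff in candidates:
        # Count how many past cases the rule "score >= cutoff means success" gets right.
        correct = sum(
            (score >= cutoff) == is_success(outcome)
            for score, outcome in training_data
        )
        if correct > best_correct:
            best_cutoff, best_correct = cutoff, correct
    return best_cutoff

def predict(cutoff, new_scores):
    """Apply the learned rule to cases the algorithm has never seen."""
    return [score >= cutoff for score in new_scores]

# Hypothetical historical records: (score, outcome label).
history = [(2, "no"), (3, "no"), (5, "yes"), (6, "no"), (7, "yes"), (9, "yes")]
cutoff = learn_threshold(history, is_success=lambda outcome: outcome == "yes")

print(cutoff)
print(predict(cutoff, [4, 8]))
```

Here the learned cutoff is 5, so a new case scoring 8 is predicted a success and one scoring 4 is not. Whatever patterns sit in `history`, including any skew in who was recorded as a success, become the rule applied to new people.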

In a TED Talk, O’Neil explained that “algorithms are opinions embedded in code. They repeat past practices and patterns, and automate the status quo.” Later on, you’ll see why this feature of algorithms can have detrimental effects on people’s lives.

“Data is destiny”

Computer scientist and ethical A.I. activist Joy Buolamwini first discovered the limitations of facial recognition while doing a project during her graduate work at MIT. The facial recognition software she used failed to recognize her face. Buolamwini, a Ghanaian-American, decided to experiment with the software by putting a white mask over her own face: the software instantly recognized her new “face.”

This moment led Buolamwini to investigate how the algorithms in facial recognition technology fail to recognize and accurately identify dark-skinned people and women, shaping her graduate work and the future of facial recognition in America.

She explains in the documentary Coded Bias that “data is destiny because it teaches A.I. If you have skewed data, you have skewed algorithms.” Taking this one step further, skewed algorithms produce skewed identification and verification.

How the bias occurs

Recall what we discussed above: you need a chosen, or “training,” set of data to teach A.I. what you want it to do. Research has found time and again that the people building facial recognition algorithms rely on a narrow set of data in which the majority of images are of white men. As a result, these algorithms learn to accurately identify white men but fail to accurately identify women and people of color.
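The link between skewed training data and skewed accuracy can be illustrated with a deliberately tiny toy in Python. This is a sketch under loud assumptions, not how commercial systems work: each “face” is reduced to one hypothetical number, groups A and B are made-up clusters, and the model is a simple nearest-neighbour rule that recognizes a face only if it is close to something it saw during training.

```python
def detects(training_examples, value, tolerance=0.2):
    """Nearest-neighbour toy: a face 'counts' if it is close to any example the model has seen."""
    return min(abs(value - seen) for seen in training_examples) <= tolerance

def detection_rate(training_examples, test_values):
    """Fraction of test faces the toy model recognizes."""
    hits = sum(detects(training_examples, v) for v in test_values)
    return hits / len(test_values)

# Hypothetical one-number "face features": group A clusters near 1.0, group B near 3.0.
group_a_train = [0.6, 0.7, 0.8, 0.9, 1.0, 1.1, 1.2, 1.3, 1.4]
group_b_train = [2.6, 2.7, 2.8, 2.9, 3.0, 3.1, 3.2, 3.3, 3.4]

skewed = group_a_train + [3.0]            # nine group A examples, one group B example
balanced = group_a_train + group_b_train  # equal coverage of both groups

test_a = [0.65, 1.05, 1.35, 0.75]
test_b = [2.65, 3.05, 3.35, 2.95]

for name, training in [("skewed", skewed), ("balanced", balanced)]:
    print(name, detection_rate(training, test_a), detection_rate(training, test_b))
```

Trained on the skewed set, the toy recognizes every group A test face but only half of the group B faces; with balanced coverage, both groups are fully recognized. Real systems are vastly more complex, but the dependence on what the training data covers is the same.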

For example, the ACLU ran an experiment using Amazon’s facial recognition software, Rekognition, to compare photos of members of Congress against a database of arrest photos. Rekognition produced 28 false matches, incorrectly identifying members of Congress as people in the arrest images. People of color were disproportionately represented: nearly 40% of the false matches were people of color, although they made up only about 20% of Congress at the time.
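Audits like the ACLU’s look at how errors are distributed across groups. The sketch below shows two common ways to express that in Python: each group’s false match rate, and each group’s share of false matches compared with its share of the people tested. The group names and counts are hypothetical and are not the ACLU’s data.

```python
def false_match_rates(tested, false_matches):
    """Per-group false match rate: false matches divided by people tested in that group."""
    return {group: false_matches.get(group, 0) / tested[group] for group in tested}

def share_comparison(tested, false_matches):
    """For each group: (share of all false matches, share of all people tested)."""
    total_tested = sum(tested.values())
    total_false = sum(false_matches.values())
    return {
        group: (false_matches.get(group, 0) / total_false, tested[group] / total_tested)
        for group in tested
    }

# Hypothetical audit counts, for illustration only.
tested = {"group_x": 80, "group_y": 20}
false_matches = {"group_x": 6, "group_y": 4}

print(false_match_rates(tested, false_matches))
print(share_comparison(tested, false_matches))
```

In this made-up example, `group_y` is 20% of the people tested but 40% of the false matches, and its false match rate (0.2) is more than double that of `group_x` (0.075).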

The current use of facial recognition

Below are just a few examples of the technology’s invisible presence in our daily lives.

Law Enforcement

According to a report by The Atlantic, U.S. law enforcement started using facial recognition in the early 2000s. Many law enforcement departments use Clearview AI’s facial recognition software. The company builds its database by scraping images of people from the internet and social media without their consent. If your image is on the internet, this company can identify you in an instant. Yet its CEO has acknowledged that the tool finds a match only up to about 75% of the time.

Employment

HireVue is a company that developed facial recognition software to measure a candidate’s demographic and internal traits during an interview, such as IQ, personality or even criminality. It sells this software to HR departments across the United States.

Customer surveillance & screening

Rite Aid deployed facial recognition in over 200 of its stores in low-income, non-white neighborhoods in Los Angeles and New York City. Until the company announced in 2020 that it would no longer use the technology, few shoppers knew they were being identified this way.

Facial recognition in other countries

China has integrated facial recognition into nearly every part of its citizens’ daily lives, creating a mass surveillance state. Everyday life in the country depends on being identified by one’s face: facial scanning is used to access the internet, pay for goods and take the train. Not only do citizens rely on this technology to get through the day, but the government also monitors and tracks their behavior.

Consequences of the bias

As one can imagine, Joy Buolamwini wasn’t the first person of color to be inaccurately identified by facial recognition, and the results have ranged from humiliation to ruined lives. As the cases below show, the technology can perpetuate harmful racial biases in the United States and abroad.

Wrongful Arrests

Nijeer Parks was wrongfully arrested due to a false match. He was arrested on charges of shoplifting and attempting to hit a police officer with a car. Parks was 30 miles away at the time of the incident.

He paid a heavy price: 10 days in jail and $5,000 in legal defense and court fees to challenge the city of Woodbridge, N.J.

False Imprisonment

In 2015, law enforcement in Jacksonville, Florida, arrested Willie Allen Lynch for selling $50 worth of cocaine after identifying him with the facial recognition tool FACES (Face Analysis Comparison Examination System). Undercover agents photographed a person selling the cocaine, but the detectives could not identify the person in the photo and turned to FACES for help.

Willie’s photo was one of four potential matches for the photo, and the person overseeing the system incorrectly concluded that Willie was the person pictured. He was arrested and sentenced to eight years in prison. His appeal, which argued that he had been misidentified, was rejected in 2018.

Loss of employment

Multiple Black Uber drivers in Britain have lost work after the facial recognition system Uber uses to verify drivers failed to recognize them and locked them out of their accounts. Their accounts were eventually deactivated.

The Independent Workers’ Union of Great Britain (IWGB) is backing one Uber driver who has filed an employment tribunal claim, arguing that the software unlawfully terminated his account. The IWGB claimed that at least 35 other drivers had their Uber accounts ended because of alleged mistakes by the software, which was made by Microsoft.

Absence of regulation in the U.S.

Currently, there is no major federal regulation or oversight of facial recognition in the U.S. This absence points to a larger gap in U.S. legislation: the lack of a federal law that protects people’s data and privacy.

This absence explains why law enforcement and certain tech companies use our images without our knowledge or consent. It’s why people are being sentenced for crimes they didn’t commit, and why a teenage girl was even barred from entering a roller skating rink. In short, our freedom, privacy and rights hang in the balance until effective legislation is rolled out.

The future ahead of us

Joy Buolamwini’s work and activism haven’t been in vain. In the wake of her congressional testimony and the efforts of many other activists and experts, several cities have banned the use of facial recognition until oversight and laws are in place. Amazon placed a moratorium on police use of Rekognition, the same software the ACLU had tested.

Until federal laws are in place, we must push our elected representatives to address this issue and protect our society from mass surveillance, wrongful arrests and imprisonment, serious violations of privacy and harmful biases.